Introduction

Data Mining, otherwise known as Knowledge Discovery in Databases (KDD), refers to the process of discovering interesting patterns, trends, anomalies, and other valuable knowledge from a large repository of data. Data mining uses statistical and mathematical techniques to extract useful patterns and knowledge that exist in data. The different data sources include databases, data warehouses, information repositories, the web, etc. There are various methods used for Data Mining such as association rules, clustering, classification, prediction, sequential patterns, and regression.

This article focuses primarily on Apriori and Eclat algorithms, a famous data mining algorithm to generate association rules. We will first begin with the definition of association rule mining, and then understand the concepts of the apriori and eclat algorithms, its R implementations, and finally end with the advantages and disadvantages of the Apriori algorithm.

Assocation Rule Mining

Association rule mining is a rule-based data mining technique to identify associations, correlations, and interesting patterns in large databases. The main idea is to find relationships between the data in the dataset and to discover rules.

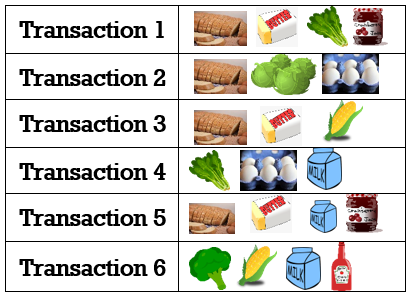

The most common and popular application of association rule mining is market basket analysis, where the database containing customer transactions is mined to discover the buying patterns. The generated association rules define customers' behaviour in buying items, i.e., it identifies the relationship between the different items that customers often buy together.

Association Rules

Association rule mining is a conventional and rule-based method for determining interesting relations between attributes in large databases. It is intended to discover all hidden associations or patterns that satisfy some user-predefined criteria.

Association rules are simple If/Then statements that help discover relationships between the data in the data set. An association rule will be of the form {A => B}, where A and B define a set of attributes referred to as Antecedent and Consequent, respectively.

Antecedent: The ‘If’ part is referred to as antecedent which is an item or set of items that is found in data. It forms the Left Hand Side (LHS) of the rule.

Consequent: The 'Then' part is referred to as consequent which is an item or set of items that is likely to found in combination with the antecedent. It forms the Right Hand Side (RHS) of the rule.

The above example states that, if Bread and Butter are bought then, the customers are also likely to buy Jam. Also, both the antecedent and consequent are disjoint in nature. The following measures are used to select potentially relevant rules in all possible rules.

Rule Evaluation Metrics

Support and Minimum Support

The support for a rule (A=>B) indicates how frequently the antecedent and the consequent (If/Then) appear together in the dataset.

The minimum support is a user-specified threshold value that acts as a minimum criterion that will be used to exclude or filter rules in the result that have a support value less than the given minimum support.

Mathematically, we can define support as:

Confidence and Minimum Confidence :

The Confidence for a rule (A=>B) indicates the number of times a given rule is found to be true. It measures the reliability of the inference made by an association rule. The values of confidence range between 0 and 1.

The minimum confidence is a user-specified threshold value that acts as a minimum criterion that will be used to prune or filter uninteresting rules in the result that have a confidence value less than the given minimum confidence.

Mathematically, we can define support as:

The association rule mining can be viewed as a two step process

- Find Frequent Itemsets : An Itemset is said to be frequent if it satisfies the predetermined minimum support threshold value. That is, Itemsets whose support value is greater than or equal to the minimum support.

- Generate strong association rules from the frequent itemsets : Association rules are said to be strong if it complies with both the minimum threshold values of support and confidence.

Types of Association Rule Mining Algorithm

Association rule mining can be implemented using three algorithm. They are

- Apriori

- Eclat

- FP - Growth

Apriori

The Apriori algorithm is a widely used algorithm for mining frequent itemsets and discovering potential association rules from the given dataset. It is considered an influential unsupervised learning approach since it is used to mine hidden patterns or associations in the given dataset without any target variable for the model.

Apriori algorithm employs breadth first search strategy to discover frequent itemsets. It is based on an important property that states that

All non-empty subsets of a frequent itemset must also be frequent. In other words, if an itemset is infrequent, then all its superset are also infrequent.

How Apriori works

- Find all Frequent Itemsets

- Join step : This step is also known as candidate generation which generates (K+1) itemsets from K- Itemsets by joining with itself. For example, 1-itemset {{a},{b},{c}} is made to self join with itself to form 2-itemset {a,b},{a,c},{b,c}.

- Prune step : During this step, the Antimonotone property is applied to reduce the search space of candidate itemsets. As per the antimonotone property, if the support value of an itemset is less than the minimum support threshold, then all of its supersets will also be less than the minimum support. Thus, it can be pruned.

- Generate strong association rules : Using the frequent itemsets, select rules which satisfies minimum support and minimum confidence threshold. Such rules are called strong rules.

Eclat

Eclat stands for Equivalence Class Clustering and bottom-up Lattice Traversal, and it is a popular algorithm for mining frequent if-then patterns in the dataset. Eclat algorithm uses a Depth First Search approach to discover rules, which is usually faster than a Breadth First Search. The use of the DFS strategy consumes less memory than Apriori.

Eclat algorithm can perform mining only on vertical transactional databases. Even if the database is in horizontal format, we need to transform it into vertical format for mining. This vertical approach makes Eclat execute faster compared to Apriori, which uses a horizontal data format for generating frequent itemsets. Another advantage of using vertical dataset is that it avoids repeated scanning of the dataset to calculate the support value. Eclat only deals with support metric for mining itemsets.

Apriori Algorithm Implementation in R Language

Dataset Description

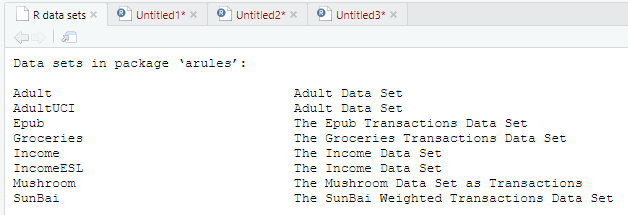

The dataset used here is the "groceries" dataset which is available as pre-loaded in R. Run data() command from the terminal to view list of built-in datasets.

The package "arules" in R provides infrastructure for analyzing transactional data using frequent itemsets and association rules. Similarly, the other packages like "arulesviz", "coefplot", "ggplot2" and "plotly" are used to visualize the generated rules in a graphical way. To Install and load the packages, run the following code fron the terminal.

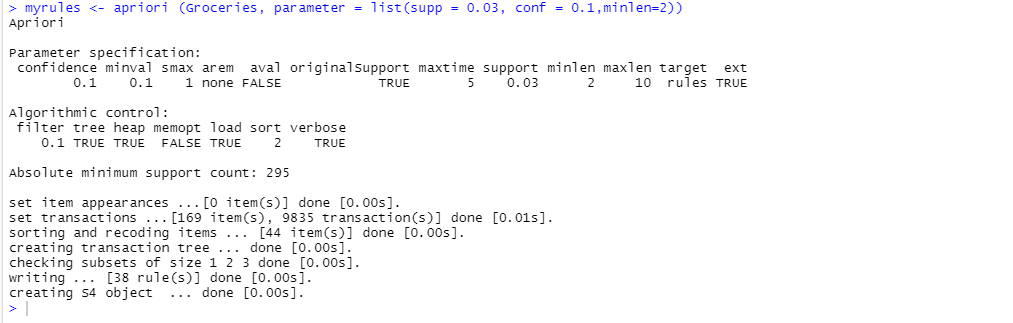

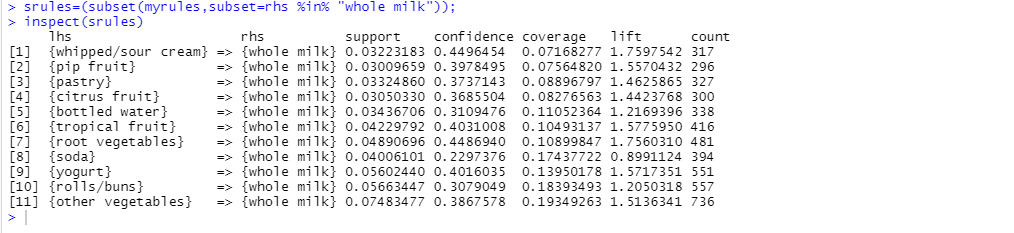

Apply apriori() function

The function "apriori()"is an in-built function in R which takes transactional dataset and parameters list as input and generates association rules as output.

Parameter list

1. Support (supp) : Minimum support threshold.

2. Confidence (conf) : Minimum confidence thereshold.

3. Minimum length (minlen) : Minimum length of the rules.

4. Maximum length (maxlen) : Maximum length of the rules.

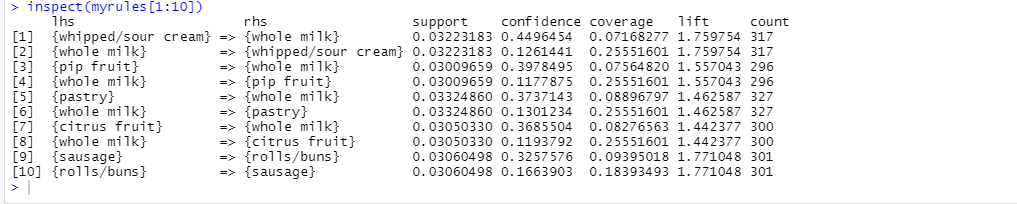

Apply subset() function

The function "subset()" is used to filter rules that matches with the expression given in the subset.

# To select only rules with rhs = "Whole milk"srules=(subset(myrules,subset=rhs %in% "whole milk"));inspect(srules)

Visualize the rules

# Visual representation of rules

plot(srules,jitter=0)

plot(srules,method="two-key plot")

plot(srules,method="graph", engine = "htmlwidget")

plot(srules,engine="plotly")

Eclat Algorithm Implementation in R Language

# To install packagesinstall.package("arules")install.package("arulesviz")install.package("plotly")install.package("ggplot")install.package("coefplot")

# To load installed packageslibrary("arules")library("arulesViz")library("plotly")library("ggplot2")library("coefplot")

# To import "Groceries" datasetdata("Groceries")

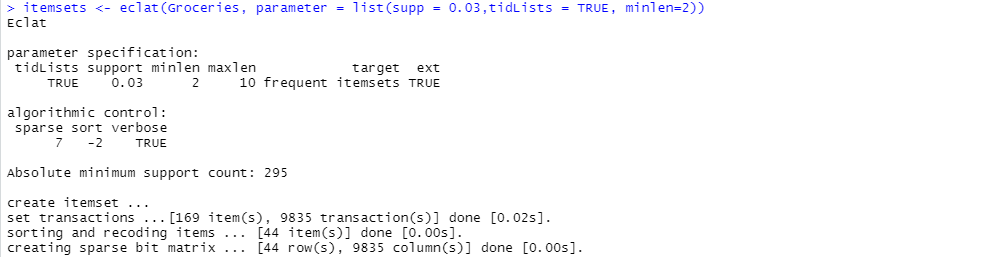

Apply eclat() function

The eclat() function returns an object of class itemsets as output. This algorithm uses simple intersection operations for equivalence class clustering along with bottom-up lattice traversal.

# Use eclat to find itemsetsitemsets <- eclat (Groceries, parameter = list(supp = 0.03, tidLists = TRUE, minlen = 2))

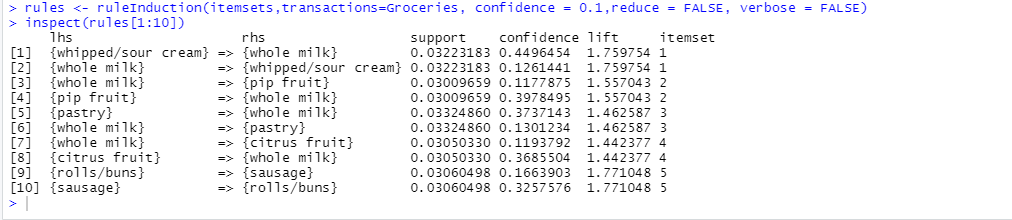

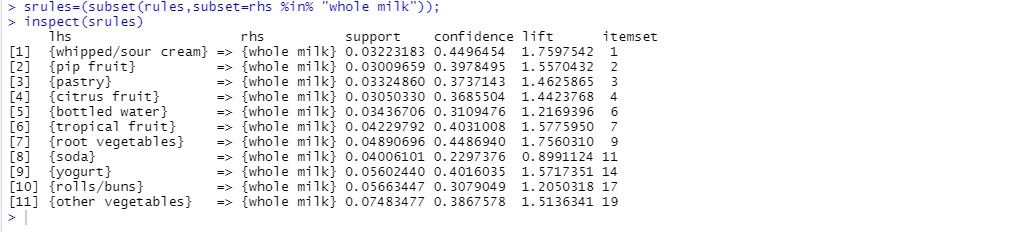

Apply ruleInduction() function

The ruleInduction() function can be used to generate association rules from the found itemsets.

# ruleInduction to generate rules from found itemsetsrules <- ruleInduction(itemsets,transactions=Groceries, confidence = 0.1,reduce = FALSE, verbose = FALSE)

# To view generated rulesinspect(rules)

# To select only rules with rhs = "Whole milk"srules=(subset(rules,subset=rhs %in% "whole milk"));inspect(srules)

Visualize the rules

plot(srules,method="two-key plot")

plot(srules,method="graph", engine = "htmlwidget")

plot(srules,engine="plotly")

0 Comments